Cerebras open-sources seven GPT-3 models ranging from 111 million to 13 billion parameters. Trained using the Chinchilla formula, these models set new benchmarks for accuracy and compute efficiency, according to Cerebras.

About Cerebras-GPT: Cerebras-GPT is a family of open, compute-efficient large language models. The seven released models span 111 million to 13 billion parameters, and each was trained with a Chinchilla-style token budget to improve accuracy for a given amount of compute.
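The "Chinchilla formula" is a compute-optimal scaling rule: for a fixed compute budget, the number of training tokens should scale with model size. A minimal sketch of the arithmetic follows, assuming the commonly cited rule of thumb of about 20 training tokens per parameter and the standard estimate of training compute, C ≈ 6·N·D FLOPs (N parameters, D tokens). The seven intermediate parameter counts and the names `TOKENS_PER_PARAM`, `MODEL_SIZES`, `chinchilla_tokens`, `training_flops`, and `budget_table` are illustrative assumptions, not taken from this listing. Cerebras's actual token counts may differ slightly from this back-of-the-envelope estimate.

```python
# Sketch: Chinchilla-style compute-optimal token budgets for seven model sizes.
# Assumptions (not from the source text): ~20 training tokens per parameter,
# and training compute ~= 6 * N * D FLOPs for a dense transformer.

TOKENS_PER_PARAM = 20  # assumed Chinchilla rule of thumb

# Hypothetical table of parameter counts, assumed from the public release;
# only the 111M and 13B endpoints are stated in the listing.
MODEL_SIZES = {
    "111M": 111e6,
    "256M": 256e6,
    "590M": 590e6,
    "1.3B": 1.3e9,
    "2.7B": 2.7e9,
    "6.7B": 6.7e9,
    "13B": 13e9,
}


def chinchilla_tokens(n_params: float, ratio: float = TOKENS_PER_PARAM) -> float:
    """Compute-optimal training tokens D for a model with n_params parameters."""
    return ratio * n_params


def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate training compute C ~= 6 * N * D FLOPs."""
    return 6.0 * n_params * n_tokens


def budget_table(sizes: dict[str, float]) -> list[tuple[str, float, float]]:
    """Return (name, tokens, FLOPs) for each model size, in insertion order."""
    rows = []
    for name, n in sizes.items():
        d = chinchilla_tokens(n)
        rows.append((name, d, training_flops(n, d)))
    return rows


if __name__ == "__main__":
    for name, tokens, flops in budget_table(MODEL_SIZES):
        print(f"{name:>5}: {tokens / 1e9:7.1f}B tokens, {flops:.2e} FLOPs")
```

Under these assumptions the 13B model gets about 260B training tokens and roughly 2.0e22 FLOPs of training compute, while the 111M model needs only about 2.2B tokens. Smaller models therefore train on far fewer tokens than a fixed-dataset approach would give them, which is where the compute savings come from.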

Drag-and-drop editor for effortless design

Advanced content generation capabilities

Effortless data integration

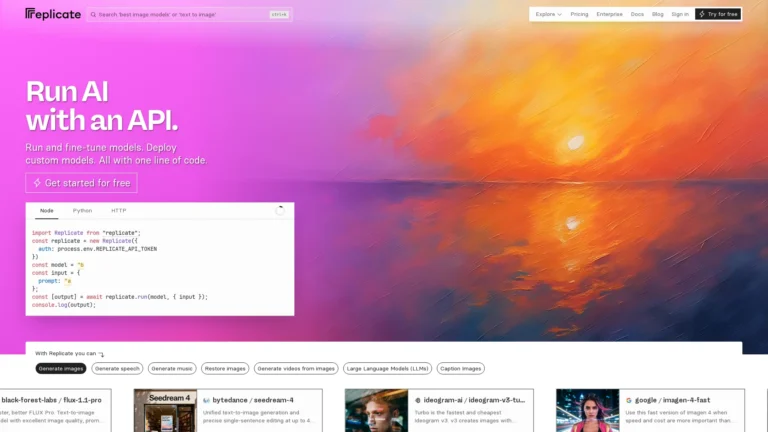

Run open-source machine learning models

Supports a network of multiple language models for diverse outputs

Content generation for conversational AI bots

Use ChatGPT directly on the website or YouTube

Automation of coding, debugging, and testing